Complexity: The Next Big Thing In Physics

- JYP Admin

- Oct 8, 2024

Updated: Oct 15, 2024

Author: Arpan Dey

“The beauty of physics lies in the extent to which seemingly complex and unrelated phenomena can be explained and correlated through a high level of abstraction by a set of laws which are amazing in their simplicity.” - Melvin Schwartz

Abstract

This article is about the physics behind complex systems and recent developments in this emerging field, in particular, assembly theory [1]. Complexity is undoubtedly an exciting topic with wide-ranging applications. Some researchers claim that the study of complex systems is poised to bring a paradigm shift in the physics landscape, and might pave the path to the solution of many persisting and perplexing mysteries in physics, and perhaps all of science. We begin with a discussion on the challenge of scientifically defining complexity. Then we discuss assembly theory and how it explains the evolution of complex systems. We then talk about some features most complex systems are found to have in common, like emergence and self-organization, as well as their significance. And finally, there is some further discussion on the emergence of ordered complexity from randomness.

Defining complexity

When we think about the future of physics, most of us inevitably think of the theory of everything, or the unified field theory [2] - the holy grail of physics. However, the next big thing in physics might not be the discovery of a unified field theory, but a better understanding of complexity [3]. Progress in theoretical physics has stalled in the last few decades. The last significant achievement was the discovery and partial verification of the Standard Model of elementary particles [4]. Since then, theoretical physicists have been engaged in a seemingly futile battle to unify general relativity with the other three forces of Nature, described by quantum field theory [5]. There have been numerous theories like string theory [6, 7] and loop quantum gravity [8]. However, so far none of these theories have been able to satisfactorily answer all questions or garner enough experimental support to be taken seriously as a scientific theory. This has led some physicists to wonder whether it is even possible to unify gravity with quantum mechanics. Although progress in theoretical physics has been discouraging, scientists have been making huge progress in the fields of complexity research, consciousness research, emergence, chaos theory, artificial intelligence etc. [9]

First of all, we need an objective definition of complexity. A point to be noted here is that we are not trying to classify systems into two categories: complex systems and simple systems. Although a complicated electronic circuit may appear simple next to a human brain, it is complex in its own right. What we are looking for is a measure of complexity. Every system will have a particular measure of complexity. Of course, this measure should be lower for an electronic circuit - no matter how complex - than for a human brain. Anyway, so what is complexity? Of course, it is a measure of how complex something or some phenomenon is. Or how non-simple it is. Or how difficult it is to understand, describe and reproduce. But we are looking for a quantitative definition of complexity, not a qualitative one. This is where things get difficult. There are many ways to define complexity. And since complexity is a relatively new and less-understood topic, there is no general consensus about the best way to define complexity quantitatively [9, 10]. What makes things even more difficult is the fact that complexity is of significance in many disciplines, like physics, mathematics, computer science and biology. Physicists are interested in a particular aspect of complexity and are seeking to define it accordingly, but computer scientists are probably looking for a different definition [10].

Physicists want to define complexity quantitatively in such a way that we can use it to formulate new laws that elegantly explain the workings of complex systems. One way to think of complexity is to think about the system’s predictability [3]. The more unpredictable the system is, the greater its complexity. A human is more complex than a rock simply because it is much more difficult to predict a human’s actions than a rock’s. If we know how the material the rock is made of behaves, and if we know the conditions of the environment the rock is kept in, we can predict the behavior of the rock. Of course, this is not simple and is surely not possible in practice, but it is much simpler than predicting the behavior of a human. To do the latter, we need detailed knowledge of the precise neural circuitry in the brain of that human, detailed knowledge of biology and human anatomy in general, detailed knowledge of the surrounding conditions and a lot of other things.

Probably the most popular measure of complexity is algorithmic complexity [11]. It is simply the length of the shortest computer program that can completely describe the system whose complexity we are trying to measure. However, this is not of much use to physicists. This is because everything - from an atom to a galaxy - is made of the same elementary particles (fermions), which interact via the same force-carrying particles (bosons) following the same laws of physics; the law of gravitational interaction is also exactly the same for every object experiencing the gravitational force. So basically, the same theoretical framework, the same mathematics, describes everything in this universe: the exact same algorithm. So, algorithmic complexity does not serve the purpose of the physicist [12].
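To make the idea of "program length" a little more concrete, here is a minimal Python sketch. True algorithmic complexity cannot be computed in general, so the sketch uses the compressed size of a description as a rough, computable stand-in. This proxy, the choice of the zlib compressor and the helper name `compressed_size` are assumptions made for illustration; they are not part of the definition in [11].

```python
import random
import zlib


def compressed_size(data: bytes) -> int:
    """Size in bytes of `data` after zlib compression.

    Used here only as a crude proxy for algorithmic complexity:
    the shorter the compressed form, the simpler the description.
    """
    return len(zlib.compress(data, 9))


# A highly regular description: the same two bytes repeated 5000 times.
regular = b"ab" * 5000

# An irregular description of the same length, from a seeded generator
# so that the example is reproducible.
rng = random.Random(0)
irregular = bytes(rng.getrandbits(8) for _ in range(10000))

print("regular:  ", compressed_size(regular), "bytes")
print("irregular:", compressed_size(irregular), "bytes")
```

The regular description compresses to far fewer bytes than the irregular one of the same length, which is the sense in which it has a shorter "program".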

But what is it that separates a human from a rock? An obvious answer could be the number of constituent particles, but surely not the kind of constituent particles, because every material is made of atoms or molecules, every molecule is made of atoms, and every atom is made of electrons, protons and neutrons (or, if we go further, electrons and quarks) - the same elementary particles. Two crucial aspects of the system we are missing out on are the arrangement of the constituent atoms and the way they interact with one another. Note that a complex arrangement inevitably implies a larger number of constituent particles, because the number of possible arrangements is constrained if the number of particles is very small. The particles in the rock are arranged differently (and in a much simpler fashion) than in a human. So, it seems that some measure of structural complexity would be more interesting to us than algorithmic complexity [12]. However, we are still missing something here. Although we take into account the structure of the system, we completely ignore the way the system was assembled. There are many ways to assemble a complex system. In the end, all of these assembly processes will result in the exact same system structurally. But some of these assembly processes will be simpler and quicker than others. So, in some sense, the systems assembled according to the simpler and quicker assembly processes are less complex than the other systems, simply because it is easier to build them. This problem is addressed by a novel and promising theory - assembly theory [1].

Assembly theory and the evolution of complex systems

Assembly theory is a recent, promising and general theory about the evolution of complex systems, with a wide range of applicability. Assembly theory measures complexity based on how difficult it is to assemble the system. To assemble big and complex systems, we first need to build the shorter parts of the system. And since there are different ways to do this (we could build this part before that, or the other way round, etc.), assembly theory introduces an element of history-dependence in complexity. There is also an element of selection. Some things assemble but fall apart and are gone, whereas other things that assemble remain and multiply, and the copies that are produced do the same, occasionally changing themselves slightly in response to external conditions. This is just like natural selection and adaptation in biology, in other words, evolution [13]. In his theory of evolution Darwin showed how the mechanism of natural selection could give rise to more and more complex life forms from a self-replicating molecule. However, conventionally it is assumed that this first self-replicating molecule arose just by chance [1]. Of course, it is not impossible for such a molecule to emerge just by chance from a pool of billions of molecules over a long period of time. However, it is still very unlikely that it emerged without any external condition that favored the assembly of that particular molecule over other similar possible molecules. Assembly theory might be able to better explain how exactly the first self-replicating molecule emerged.

Note that the element of history in assembly theory is extremely important. If we are looking to quantify the complexity of an object using assembly theory, for instance, in some cases we might want to consider the complete history of the system. For instance, consider synthetic elements, or elements that do not occur naturally, but have been synthesized by humans in the laboratory. Of course, a synthetic element is structurally less complex than a human, and it is much simpler to assemble a synthetic element than to assemble a human. However, humans assembled the synthetic elements. This means synthetic elements would not exist without humans, so in a certain sense, to build a synthetic element, we first have to assemble a human. From this perspective, synthetic elements are more complex than humans [12]. When we say synthetic elements are less complex than humans, we are already making the implicit assumption that humans exist. So, in some cases, by “history”, we refer to the entire history of the object’s assembly, starting from scratch, without assuming the existence of any other complex system. The history of assembly of a system is important because only by studying this history can we hope to explain every aspect of the system. Theoretically, there are a lot of possibilities, but due to some historical accident, some of these possibilities do not occur and others do, affecting greatly how the final assembled system turns out to be. For example, Newton’s laws can explain the motion of the planets around the Sun, but not why the planets of the Solar System move in the same direction [14]. This is because the Solar System condensed out of a rotating disc of gas, which is merely a historical accident. Such accidents are not inevitable, but neither can they be "explained" in any way. If two masses approach a star from opposite directions, due to the gravitational field of the star, they might start orbiting the star. 
In this case, since they arrived from opposite directions, they will obviously orbit the star in opposite directions. This is also a perfectly valid scenario. But in the case of the Solar System, since it originated from a rotating disc of gas, all the masses were already moving in the same direction and they continued doing so. So, in short, we can conclude that to study the complexity of a system, we need to study the evolution of the system. The term “evolution” captures the assembly history of the system, the selections that shaped the assembly process and the way the system responds to feedback and improves itself (adaptation). Not all of this information can be gathered just from the structure of the system; we need to understand how the system came to be and how its structure changes over time.

Assembly theory addresses the question of how complex systems arise in Nature when the information about their design is not present in the known laws of physics. The approach taken by this theory to investigate this question is novel: assembly theory does not seek to redefine the laws of physics, but instead redefines the concept of an object that these laws operate on [15]. Instead of treating objects as definite particles (or groups of particles) of which only the present properties are described by the laws of physics, here we define objects by taking into account all the possible ways they could be formed. All of this information is contained in the assembly of an object, which is a quantity that depends on the copy number (the number of copies of the object) and the assembly index (the number of steps on the shortest path to assemble the object) [15]. Note that both of these are well-defined quantities in assembly theory. Using this information, assembly theory explains, among other things, the way complex systems are assembled in Nature and why certain complex systems are observed in large numbers while others are not. In assembly theory, an object must be finite, distinguishable, and persist over an appreciable amount of time, and it must be breakable, at least in principle, so that we can determine the constraints to construct it from elementary building blocks [15]. Here, instead of making the usual assumption that the building blocks of Nature (elementary particles) are fundamental and unbreakable, we treat objects, in general, as “anything that can be built” [15]. The next important assumption on which assembly theory is based is that if a complex object exists in Nature in large numbers, it invariably indicates the presence of some mechanism that generates the object (selection).
This is obvious since it is extremely unlikely that the same complex object will, by itself, exist in large numbers in Nature, without some mechanism already in place that is conducive to its assembly. Objects formed from building blocks via an undirected pathway are likely to have a low copy number, whereas objects formed via directed pathways (which implies selection) are likely to have a higher copy number.
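As an illustration of how copy number and assembly index can be combined into a single quantity, the sketch below assumes the form of the assembly equation given in [15]: the assembly A of an ensemble is the sum, over its distinct objects, of e^(a_i) (n_i - 1) / N_T, where a_i is the assembly index of object i, n_i is its copy number and N_T is the total number of objects in the ensemble. The function name `assembly` and the two example ensembles are hypothetical and chosen only to show the trend.

```python
import math


def assembly(ensemble):
    """Assembly of an ensemble, assuming the equation from [15].

    `ensemble` is a list of (assembly_index, copy_number) pairs,
    one pair per distinct object in the ensemble.
    """
    total_objects = sum(copies for _, copies in ensemble)
    return sum(
        math.exp(index) * (copies - 1) / total_objects
        for index, copies in ensemble
    )


# Hypothetical ensemble with selection: a complex object (index 10)
# is present in many copies alongside a simple one (index 1).
with_selection = [(10, 50), (1, 50)]

# Hypothetical ensemble without selection: the complex object
# appears only once, and almost everything else is simple.
without_selection = [(10, 1), (1, 99)]

print(assembly(with_selection))
print(assembly(without_selection))
```

An object with a single copy contributes nothing to the sum, so a high assembly index only counts when it comes with a high copy number. This matches the idea that many copies of a complex object signal selection.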

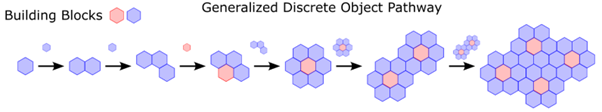

Figure 1: It is unlikely that a huge number of identical objects assembled randomly via undirected pathways will exist in Nature. However, if there is selection, the copy number of the object will obviously be higher. In this figure, we see that the path P2 ultimately merges with path P1, and path P1 leads to the formation of the complex object Q via path P3. Thus, both P1 and P2 lead to the formation of the object Q, thus increasing the chances of the object Q being formed. Since there is a selection mechanism that supports the formation of Q, it has a high copy number. [Source: https://www.nature.com/articles/s41586-023-06600-9]

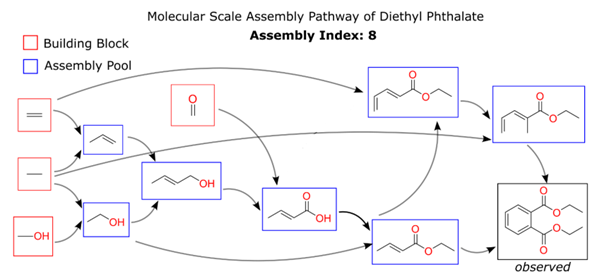

To find the assembly index of an object, we look at how the object is assembled. We start with the basic building blocks of the object, and join them in different ways to form new structures. These intermediate structures are added to the group of objects (assembly pool) that are available for subsequent steps in the assembly process. This is important in order to determine the minimum number of constraints to build the object. The assembly index of the object is associated with the shortest assembly pathway that can generate the object in its entirety.
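The procedure described above can be sketched for a toy case. In the Python example below, objects are text strings, the basic building blocks are the distinct characters of the target string, and a single assembly step joins two objects from the assembly pool end to end. This string model and the brute-force search are assumptions made for illustration; they are not the method used for molecules in [15], and the search is only practical for short strings.

```python
def assembly_index(target: str) -> int:
    """Minimum number of joining steps needed to build `target`.

    Toy model: building blocks are the distinct characters of `target`,
    and one step concatenates two objects already in the assembly pool.
    Every object built along the way is added to the pool for reuse.
    """
    if len(target) <= 1:
        return 0

    building_blocks = frozenset(target)
    # Only substrings of the target can ever be useful intermediates.
    useful = {
        target[i:j]
        for i in range(len(target))
        for j in range(i + 1, len(target) + 1)
    }

    def reachable(pool, steps_left):
        """True if `target` can be built from `pool` in at most `steps_left` steps."""
        if target in pool:
            return True
        if steps_left == 0:
            return False
        for left in pool:
            for right in pool:
                joined = left + right
                if joined in useful and joined not in pool:
                    if reachable(pool | {joined}, steps_left - 1):
                        return True
        return False

    # Iterative deepening: try 0 steps, then 1, then 2, and so on.
    steps = 0
    while not reachable(building_blocks, steps):
        steps += 1
    return steps


for word in ["AB", "ABAB", "ABCD", "AAAAAAAA"]:
    print(word, assembly_index(word))
```

Reusing intermediate structures is what keeps the index low: "AAAAAAAA" needs only three steps (A+A, AA+AA, AAAA+AAAA), whereas "ABCD", which has no repeated parts, also needs three steps despite being half as long.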

Figure 2: We start with two building blocks, and use these and some intermediate structures built at some step during the process to assemble the final object. [Source: https://www.nature.com/articles/s41586-023-06600-9]

Figure 3: The final observed object (a diethyl phthalate molecule) has been assembled in eight steps, where the formation of a new structure from two former structures is a single step. Thus, the assembly index of this molecule is eight. [Source: https://www.nature.com/articles/s41586-023-06600-9]

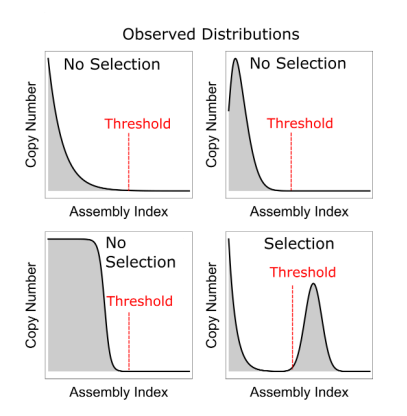

As stated previously, the assembly of an object depends on its assembly index and copy number. Both these quantities are also related, of course. In the absence of any selection mechanism, the copy number of an object decreases rapidly with increasing assembly index. A high assembly index indicates that the assembly pathway of the object is long, and thus the object is complex. So, obviously, we should not expect to find many copies of this object in Nature, since there is no selection mechanism involved in the assembly of this object. In the presence of a selection mechanism, however, we can get a high copy number even at a high assembly index.

Figure 4: Observed copy number distributions of objects at different assembly indices, in the presence as well as absence of selection. [Source: https://www.nature.com/articles/s41586-023-06600-9]

Conventionally, we apply the laws of physics to different objects in the physical world and explain the observed phenomena by assuming specific initial conditions. But it is highly unlikely that all the conditions needed for complex systems like humans to emerge in this universe were somehow included in the initial conditions of the universe (the Big Bang). Assembly theory beautifully gets around this problem. Assembly theory does not demand that all the necessary information be encoded in the initial conditions [1]. It arises as the system evolves, and this is why the history of the system (how it was assembled) plays a very important role in assembly theory. The approach taken by assembly theory is unique compared to other conventional theories that attempt to study the origin and evolution of complex structures. We first start with all possible permutations (or arrangements) of the basic building blocks. Then we filter out the permutations that are prohibited by the laws of physics. Now even among the remaining permutations at this level, some more are filtered out. For example, permutations that result due to a very fast chemical reaction are more likely to be observed in Nature than those arising from a chemical reaction with a very slow reaction rate [1]. In this way, assembly theory explains why we observe certain complex structures in Nature and not other structures, even though some of the latter structures may be perfectly allowed by the laws of physics.

Another interesting and relevant theory worth mentioning is constructor theory [1, 16]. Constructor theory studies which kinds of transformations are possible and which are not. As Marletto explains [16], constructor theory goes beyond what is, and studies what could be (or could not be). Think of it this way. That an airplane could crash is always true for any flight, regardless of whether the airplane actually crashes or not. And it is important to consider this property because if a flying machine has the property that it can never crash, it does not qualify as an airplane, since we know the laws of physics perfectly allow airplanes to crash if the required conditions are met. We have not yet been able to build an airplane that we can confidently say will never crash. In constructor theory, we consider what is possible and what is not, according to the laws of physics. So far in physics, we have mostly developed our theories under the implicit assumption that once we have specified everything that exists in the physical world and everything that happens in the physical world, we have explained all that there is to explain [16]. But for a complete description of the physical world, we also need to consider what else could have existed and what else could have happened to the existing objects if, say, the physical world had evolved in a slightly different manner (without violating the laws of physics, of course). Coming back to airplanes, if we do not consider the possibility that an airplane might have to make an emergency landing on water, we cannot explain why life vests are present under every seat of the airplane. Even if the airplane does not actually end up in the water, the reason for the presence of the life vests would still not change.
Constructor theory is a fundamental and promising theory that, coupled with assembly theory, will probably be able to provide a proper understanding about the origin and evolution of the complex structures that we see in Nature.

Assembly theory essentially studies the evolution of the system, which is an extremely important factor to consider when determining its complexity. Now, we will discuss another important concept related to complexity: emergence [17].

Emergent properties in complex systems

In a complex system, even a thorough knowledge of the constituent parts may not be enough to reveal how the whole system affects an individual part, and how they interact with one another. But we need to know this in order to understand the workings of the system in its entirety. According to downward causation [18], it is possible for the entire system to create certain constraints on its parts and cause them to behave in a way that cannot be explained just by the knowledge of the parts. Downward causation is, in some sense, the opposite of reductionism. According to reductionism, which is roughly the opposite of emergence, a system, regardless of its level of complexity, can be explained by breaking it into smaller and smaller pieces and studying the parts it is made of. In general, if we have a thorough knowledge of the parts, which form the basis of higher and more complex systems, we can figure out how these parts affect the behavior of the higher systems. In other words, there exists no property of the higher systems that cannot be explained, at least in principle, just using the knowledge of its parts. It is, of course, true that the system, as a whole, cannot be independent of its parts. The parts put constraints on the whole to a certain extent (upward causation). But at the same time, the whole also puts some constraints on the parts (downward causation). There is no general consensus on whether downward causation is possible. One of the best examples of downward causation in Nature is snowflakes, or more precisely, snow crystals [18]. All untampered snow crystals, by themselves, have a six-fold symmetry, meaning that if we rotate a snow crystal by 60 degrees, its appearance will be unaffected. Snow crystals are formed due to solidification of water, and we can understand the exact reason for them having a six-fold symmetry by studying the chemistry of water molecules. But that is not important for our purposes here. 
So, although snow crystals always have six-fold symmetry, there are many possible structural forms of snow crystals. The exact structure of a snow crystal depends on a lot of factors, including environmental factors. However, there is a constraint that whatever be the final structure, it must have six-fold symmetry. In other words, once a structure has been formed (the whole), the molecules (the parts) in the crystal are allowed to be present in only certain particular arrangements for which there is a six-fold symmetry. The whole, thus, puts constraints on the parts, demonstrating downward causation.

Figure 5: Different shapes in which snowflakes appear in Nature. [Source: https://sciencenotes.org]

In the context of emergence, downward causation demonstrates why a knowledge of the parts of a complex system might not suffice to explain every feature of the system as a whole. When many simple parts are interacting in a complex manner, emergence simply refers to the appearance of new properties of the system as a whole, which cannot be explained by studying the individual parts, or which do not arise from the properties of the individual parts. A simple example of emergence is wetness. The atoms or molecules that make up water are not individually wet; rather, wetness emerges as a property of water as a whole. In complex systems, we cannot predict or explain all properties of the system just by studying the parts it is made of. The arrangement of the parts, and the interactions between them, also play an important role. In fact, this might be why consciousness cannot be understood just by studying a single neuron. We need to study the whole brain, which is an extremely complex system. So, the point of interest is that it is not possible to completely describe a complex system just by studying the parts it is made of. Now, whether this is due to our current shortcomings or whether it is fundamentally impossible is the next important question. And this question gives rise to two kinds of emergence: weak emergence and strong emergence [19]. Weak emergence is not really emergence at all. According to weak emergence, it is possible, in principle, to completely describe a complex system just with the knowledge of its parts. However, we have not been able to achieve this feat because the system we are studying is probably too complex and sophisticated for our current calculational and computational power to handle. We cannot rule out that a supercomputer of almost unlimited computational power could simulate a complex system just with the knowledge of its parts. However, it is not possible today, and seemingly, not anytime soon.
Simulating the behavior of trillions of particles is no easy feat, even for today’s most powerful supercomputers. The point is, according to weak emergence, this is just a matter of our limitations. We do not need fundamentally new kinds of laws to explain complex systems. Or in other words, the same basic principles apply at all scales - from the most simple to the most complex. For our ease of understanding, we may formulate “new” laws of complexity that describe complex systems. However, these are not fundamentally new kinds of laws. In other words, these laws are, at least in principle, reducible to those basic laws. On the other hand, strong emergence claims that we need fundamentally new kinds of laws to study complex systems. Complex systems represent new and independent laws of physics [20]. In a universe governed by strong emergence, it is impossible, even in principle, to fully describe a complex system just by studying its parts. It must be noted that weak and strong emergence do not disprove each other. Some complex systems may be weakly emergent, while others strongly emergent. Strong emergence indicates that the complexity of the system has increased abruptly by a huge amount, which might occur when the number of possible combinations of the simpler building blocks comprising a complex system increases too much. In such cases, the system might reorganize itself into an entirely different structure and there may be an entirely new set of laws that apply to this new system [21]. However, at the same time, it must be stressed that strong emergence demands a radical change in our worldview, and since we do not understand the new laws yet, strong emergence is, in a certain sense, useless and with low predictive power. We should resort to such an explanation only if we are absolutely certain that weak emergence cannot explain the workings of the complex system we are studying. 
There may be no need for any fundamentally new set of laws to study complex systems. But of course, since it is not practically possible for us to understand complex systems just by studying the parts, we should try to find some laws (not necessarily fundamentally new laws) which make the study of complex systems easier. Anyway, what is certain is that further research on emergence is crucial to better understand complexity. Currently, all that we surely know about the relation between emergence and complexity is that the more emergent properties a system has, the more complex it is.

The edge of chaos

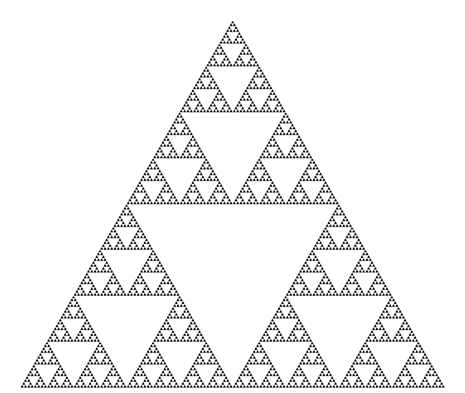

Another defining feature of complexity is that it thrives at the edge of chaos. Whenever we start thinking seriously about the fundamental nature of reality, the first question most of us ask ourselves is this: Why is there something rather than nothing? [22] That would have been the most simple: nothing exists: no matter, no consciousness, no laws (order). But it is not possible that nothing exists. Something must exist. We are here asking these questions. So, what is this something? Is our universe the most complex and unpredictable system possible (chaos)? No. The most complex and unpredictable system is complete chaos. But in general it should be noted that although chaos implies unpredictability, since a small change in the initial conditions of a chaotic system will have drastic consequences on the future behavior of the system, making it unpredictable (butterfly effect or sensitivity toward initial conditions), it is still possible to formulate certain laws that apply to these systems (here, we are not talking about complete chaos). Although the exact behavior of these chaotic systems is unpredictable at a particular instant of time, they follow certain universal laws over a long period of time. Let us illustrate this with an example. Let us choose three non-collinear points (say, A, B and C) on a plane, such that they form an equilateral triangle. A random starting point (say, P) is chosen anywhere on the plane. The game proceeds by following certain simple rules. A die is rolled. If the outcome is 1 or 2, the point halfway between the points P and A, is marked. Similarly, if the outcome is 3 or 4, the midpoint of the line segment joining the points P and B is marked. For outcomes 5 or 6, the midpoint of the line segment joining the points P and C is marked. For subsequent steps, the midpoint of the line segment joining the point last obtained, with A, B or C (depending on the outcome of the die), is marked. 
If we continue doing this for a long time (a large number of iterations), the collection of all the marked points on the plane resembles a beautiful fractal called the Sierpiński triangle [23]. This process of generating the Sierpiński triangle is not sensitive to initial conditions, although there is obviously an element of randomness in the process, since we start from a random point on the plane and proceed based on the outcomes of rolling a die, which are completely random. No matter where we choose the starting point, and no matter what sequence of outcomes we get from the die, we always get back the same pattern, provided we follow the rules mentioned. Note that the exact locations of the points at an intermediate stage cannot be predicted with certainty, since they depend on the outcomes of the die rolls. However, after a large number of iterations, as a whole we always get a definite pattern. Thus, a certain amount of randomness in the process does not always mean that the outcome will also be random.
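The game described above is easy to try out. The following Python sketch follows the same rules; the coordinates chosen for A, B and C, the unit square from which the starting point P is drawn, the seeded random generator and the function name `chaos_game` are assumptions made so that the example is self-contained and reproducible.

```python
import random


def chaos_game(n_points, seed=0):
    """Play the midpoint game and return the list of marked points."""
    rng = random.Random(seed)

    # Vertices of an equilateral triangle with side length 1.
    a = (0.0, 0.0)
    b = (1.0, 0.0)
    c = (0.5, 3 ** 0.5 / 2)

    # Random starting point P (here drawn from the unit square).
    x, y = rng.uniform(0, 1), rng.uniform(0, 1)

    points = []
    for _ in range(n_points):
        roll = rng.randint(1, 6)  # roll a die
        if roll <= 2:
            vx, vy = a            # 1 or 2: move halfway toward A
        elif roll <= 4:
            vx, vy = b            # 3 or 4: move halfway toward B
        else:
            vx, vy = c            # 5 or 6: move halfway toward C
        x, y = (x + vx) / 2, (y + vy) / 2
        points.append((x, y))
    return points


def render(points, width=64, height=32):
    """Draw the marked points as a coarse text picture."""
    grid = [[" "] * width for _ in range(height)]
    for x, y in points:
        col = min(int(x * width), width - 1)
        row = min(int((1 - y) * height), height - 1)
        grid[row][col] = "*"
    return "\n".join("".join(row) for row in grid)


points = chaos_game(20000)
print(render(points))
```

Changing `seed` changes the starting point and the whole sequence of die rolls, yet the printed picture keeps the same triangular pattern with an empty region in the middle.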

Figure 6: The Sierpiński triangle. [Source: https://commons.wikimedia.org]

Coming back to our discussion on complexity and chaos, as the complexity of a system increases more and more, it moves closer and closer to complete chaos. And when it is closest to chaos - the edge of chaos - but has not become fully chaotic, it is the most complex [12, 24]. At this stage, the system is not fully unpredictable, but there exists a lot of variety in the system, making it more complex. More variety means we need more information to completely describe or assemble the system, and thus it is more complex. Now, we used the phrase “complete chaos” previously; by “complete chaos,” we mean a system that is completely chaotic. In other words, there can be no laws that apply to such forms of chaos, making it impossible to have a measure of complexity of the same. But the world around us is clearly not completely chaotic; because even amidst the randomness, there is some order. So, we are neither in the most orderly system (nothingness), nor in the most disorderly system (complete chaos). We are somewhere in between the most simple and the most chaotic forms of existence. Nature seeks balance. It is usually not this extreme or that extreme, the answer can almost always be found somewhere in the middle. As an analogy, take music. Music is a complex form of sound, and sophisticated music has a subtle balance between predictability and unpredictability. It is the unpredictability, for instance, that makes the drop the best part of a song, but completely unpredictable random noise is not music. And a completely predictable, simple, periodic sound is not good music either. Music is at its best at the edge of unpredictability: assuming that we are listening to it for the first time, we cannot exactly predict the music, although once we get a rough idea of the melody, it is not totally unpredictable either. Music thrives somewhere between complete predictability and complete unpredictability. 
In the same way, complexity thrives somewhere between order and chaos: complexity happens at the edge of chaos. At both extremes, nothingness and complete chaos, complexity is at its minimum, because in both cases the information needed to describe the system is minimal. In the first case (nothingness), there is nothing to describe. And complete chaos implies that the system we are trying to describe is exactly the same in all respects. In other words, it is completely random, and we do not need any additional information to describe any “asymmetry” in the system. Thus, ignoring the physical description of the constituents (particles or whatever) of the system, both nothingness and complete chaos are informationally equivalent at a fundamental level.

So far we have identified three defining features that are common to most complex systems: evolution, emergence and the edge of chaos (all three, coincidentally, starting with an “e”). However, we do not know exactly how the complexity of a system depends on these features. Now, let us talk about the presence of competing effects in complex systems.

Competing effects in complex systems

In almost all complex systems, we can find competing effects. For instance, the edge of chaos is a balance between two competing effects: order and chaos. In any complex system, the intricate dance between the competing effects is a crucial factor to consider when determining its complexity. Roughly speaking, complexity arises when there are competing effects. For instance, gravity tries to crush everything down, while the expansion of the universe tries to blow everything apart [25]. The presence of these competing effects actually lies at the root of the formation of complex structures, like galaxies, in the universe. Another set of competing features often seen in complex systems is autonomy and interaction. All complex systems have some degree of autonomy; in other words, they are distinguishable as independent systems. While they may interact with their surroundings, their behavior is not fully determined by the surroundings. If that were indeed the case, the behavior of the system would change completely whenever we moved it to a different environment, and it would appear random and unpredictable. But we do not see actual complex systems in Nature behaving this way. There are at least a few basic properties of the system that do not change with time. However, the system is obviously not totally isolated from the surroundings. While it has some degree of autonomy, it also interacts with its surroundings in many different ways, and this interaction does affect its behavior to some extent. If the system did not interact with its surroundings at all, we could not have studied or even perceived the system. While interaction ensures that the system is subjected to a variety of external conditions, autonomy ensures that the system has certain properties that remain constant over an appreciable period of time. And together, they contribute to the overall complexity of the system.

Self-organization and emergence

Self-organization, which is closely related to emergence, is a property of a complex system that depends on the degree of autonomy and interaction of the system. The degree of self-organization in a complex system depends heavily on the system-surrounding boundary [21]. Self-organization, as the name suggests, is the process in which complex systems can organize themselves into even more complex structures and maintain that structure without any external control [26]. Thus, self-organization is often viewed as the reason behind the existence of overwhelming complexity in Nature. Emergence, on the other hand, can be thought of as the reason for sudden jumps in complexity [21], although this picture is not strictly accurate.

There are certain constraints a system must satisfy to be able to exhibit self-organization and emergence. First, the system should have a large number of small and simple elements, and not a small number of large elements. Next, the system must be an open system. Self-organization increases the order in the system, because the system is growing more organized. However, from the second law of thermodynamics, which is a fundamental law of physics, we know that over time, isolated systems become more disordered (their entropy increases) [27]. This is precisely why an isolated system cannot exhibit self-organization. In order to do that, the system must be an open system, meaning it can exchange matter and energy with its surroundings, which makes self-organization possible. It is perfectly possible for an open system to transfer its entropy to the surroundings, thus reducing its entropy. However, note that the total entropy of this system along with its surroundings does not decrease, in accordance with the second law of thermodynamics.
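
The entropy bookkeeping behind this argument can be written compactly. The symbols $\Delta S_{\text{sys}}$ and $\Delta S_{\text{surr}}$ are shorthand introduced here (they do not appear elsewhere in the article) for the entropy changes of the open system and of its surroundings:

```latex
\Delta S_{\text{total}}
  = \Delta S_{\text{sys}} + \Delta S_{\text{surr}} \;\geq\; 0
\quad\Longrightarrow\quad
\Delta S_{\text{surr}} \;\geq\; -\,\Delta S_{\text{sys}} .
```

So a self-organizing system with $\Delta S_{\text{sys}} < 0$ is permitted, provided the surroundings gain at least as much entropy as the system loses.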

Ordered complexity from randomness

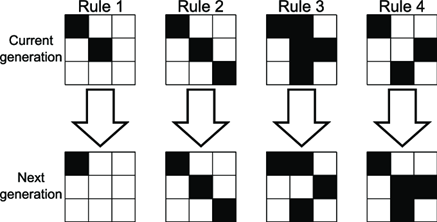

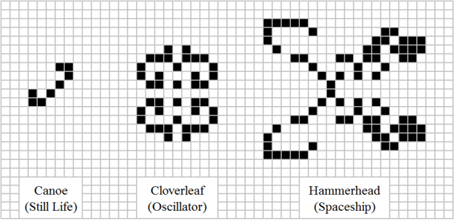

We discussed two sets of competing effects in complex systems: gravity/expansion of the universe and autonomy/interaction. A third set is rules/randomness. There are many complex systems in Nature that demonstrate an element of randomness but at the same time follow certain rules. It should be noted that these are local rules, implying that there is local or decentralized control. The rules operate at the local level and yet affect the system at the global level [26]. There is no central control in the system; although we can control the parts, we cannot directly control the whole. And although there is an element of randomness built into these rules, they are perfectly capable of giving rise to a complex system that demonstrates a remarkable degree of order and organization. In other words, ordered complexity is a fortunate product of random processes. This simply means that everything happened spontaneously and not because of external factors. The complex and ordered structures that we see in Nature, such as living organisms, are the result of seemingly random processes, such as mutations, natural selection, and environmental fluctuations. As of now, it seems there is no conscious design behind the emergence of complexity, rather it is a lucky outcome of randomness. However, this does not necessarily mean that the universe is inherently random. It is, in fact, perfectly possible that deep down the universe is perfectly deterministic, and that the apparent randomness is indicative of our limitations and/or incomplete knowledge. The universe being deterministic does not necessarily contradict the claim that there is some randomness in the universe, because even randomness can be deterministic in a certain sense. There are laws that apply to random systems; random does not mean it cannot be studied or understood. 
“Deterministic” simply means that the universe functions according to some universal laws, although of course, there is some randomness in the universe as well. So we have two competing effects: rules and randomness, and they give rise to ordered complexity. A classic illustration of this is the Sierpiński triangle [23]. It is generated according to some simple rules, but there is some randomness built into the rules (as we have seen, we start with a random point and the subsequent steps depend on the outcomes we get after rolling a die, which is random). And after a huge number of iterations based on these rules, the same fractal pattern - the Sierpiński triangle - is always generated. So the final pattern is not sensitive to the initial point or to the particular sequence of die rolls; the outcome is effectively deterministic, even though there is an element of randomness in the process. One more illustration that supports the claim that ordered complexity can emerge from a set of simple rules and some randomness is cellular automata [28]. Let’s consider the cellular automaton called the game of life [29]. This game is played on a grid consisting of black and white cells, where each cell is either alive (say white) or dead (black). Each cell is surrounded by at most eight cells (its neighbors). There are four rules of this game: alive cells with no or one alive neighbor die (solitude); alive cells with more than three alive neighbors also die (overpopulation); alive cells with two or three alive neighbors survive; and dead cells with exactly three alive neighbors become alive (reproduction).

Figure 7: The rules of Conway’s game of life. [Source: https://doi.org/10.1016/j.ssci.2019.104556]

If we play the game by following these simple rules, we get different interesting complex patterns depending on the choice of the initial configuration of dead and alive cells, which is random. Some of these patterns can even self-replicate.

Figure 8: Three different patterns formed by the game of life. [Source: https://doi.org/10.18178/ijeee.4.5.374-385]
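
The four rules of the game of life are simple enough to fit in one short function. The following is a minimal sketch, assuming an unbounded grid on which only the alive cells are stored as `(row, column)` pairs; the function name `step` and this set-based representation are choices made here for brevity, not something prescribed by the sources cited above.

```python
from collections import Counter


def step(alive):
    """Advance the game of life by one generation.

    `alive` is a set of (row, col) pairs marking the alive cells.
    """
    # Count, for every cell, how many alive neighbors it has.
    neighbor_counts = Counter(
        (r + dr, c + dc)
        for (r, c) in alive
        for dr in (-1, 0, 1)
        for dc in (-1, 0, 1)
        if (dr, dc) != (0, 0)
    )
    # A cell is alive in the next generation if it has exactly three
    # alive neighbors (survival or reproduction), or if it is already
    # alive and has exactly two alive neighbors (survival).
    # Every other cell dies or stays dead (solitude, overpopulation).
    return {
        cell
        for cell, n in neighbor_counts.items()
        if n == 3 or (n == 2 and cell in alive)
    }


# Example: a row of three alive cells (a "blinker") flips between
# horizontal and vertical every generation.
blinker = {(1, 0), (1, 1), (1, 2)}
flipped = step(blinker)
```

Starting from a randomly filled set of cells and calling `step` repeatedly is one way to explore the kinds of patterns shown in Figure 8.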

Yet another example is that of random Boolean networks, which have been used to explain self-organization in complex systems [30, 31, 32].
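
The article does not describe how such networks are built, so the sketch below assumes the standard construction: `n` nodes that are each on (1) or off (0), every node reading `k` randomly chosen nodes and applying its own randomly generated truth table, with all nodes updated at the same time. The function names (`make_rbn`, `rbn_step`, `find_attractor`) are hypothetical. Because the number of states is finite and the update rule is deterministic, every trajectory must eventually fall into a repeating cycle, which is the kind of spontaneous order these models are used to study.

```python
import random


def make_rbn(n, k, seed=None):
    """Create a random Boolean network with n nodes and k inputs per node."""
    rng = random.Random(seed)
    # For each node: which k nodes it reads, and a random truth table
    # with one output bit for each of the 2**k input combinations.
    inputs = [rng.sample(range(n), k) for _ in range(n)]
    tables = [[rng.randint(0, 1) for _ in range(2 ** k)] for _ in range(n)]
    return inputs, tables


def rbn_step(state, inputs, tables):
    """Update all nodes synchronously and return the new state tuple."""
    new_state = []
    for node_inputs, table in zip(inputs, tables):
        index = 0
        for i in node_inputs:
            index = (index << 1) | state[i]  # pack input bits into an index
        new_state.append(table[index])
    return tuple(new_state)


def find_attractor(state, inputs, tables):
    """Return (transient_length, cycle_length) for the given start state."""
    first_seen = {}
    t = 0
    while state not in first_seen:
        first_seen[state] = t
        state = rbn_step(state, inputs, tables)
        t += 1
    return first_seen[state], t - first_seen[state]
```

For example, `find_attractor((0,) * 12, *make_rbn(12, 2, seed=0))` reports how many steps a 12-node network takes to reach its cycle and how long that cycle is.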

A discussion on consciousness

Consciousness is undeniably one of the most perplexing known phenomena. Why are we conscious? How can feelings, emotions and sentience emerge from ordinary physical matter? Do they emerge from physical matter after all? The trouble with consciousness is that it will perhaps always be impossible to understand it completely. This is simply because we can attempt to understand consciousness only by using our own consciousness; we don’t know if or how we can study consciousness from a third-person perspective. Put in other words, even if consciousness were simple enough to be understood, we would not be smart enough to understand it.

Although we have a rough understanding of how life emerged from nonliving matter on the early Earth (abiogenesis [33, 34]), we don’t yet have a clear and widely accepted picture of how subjective experience (qualia) emerges from the neural circuitry of the brain. We have a rough picture of how simple molecules on the early Earth spontaneously self-assembled into complex molecules that replicated themselves, and of how lipids, proteins and nucleic acids played an important role in the formation of the first living cell. The defining feature of a cell is its boundary; it has an inner structure maintained and shielded from the outside world by a membrane (compartmentalization). The cell must also take in and use energy from its surroundings (metabolism). And of course, these cells must be capable of creating copies of themselves. On the early Earth, water, carbon dioxide, methane and ammonia were present. If we take these same substances in a laboratory and imitate the early Earth conditions, like ultraviolet rays (sunlight) and electricity (lightning), we will get a primordial soup which contains some molecules that are more complex than the ones we initially started with [35]. Over time, many complex molecules (even the unlikely ones) will form. Some of these molecules will create copies of themselves, though not exact copies. Soon this soup will be full of different varieties of molecules, and Darwin's theory of evolution and natural selection [36] can probably explain how, gradually, this resulted in more and more complex organisms and finally humans. Darwin’s theory successfully demonstrated how spontaneous processes in Nature can give rise to order and complexity and produce the illusion of design. Darwinian evolution is based on natural selection. Evolution is the spontaneous process by virtue of which a species adapts to the changes around it, in a way that increases its chances of survival. 
According to genetics, the process of reproduction does not produce exact copies, but there can be slight genetic variations in the offspring. Some of these variations will help the offspring to survive better, while others not. According to Darwin, Nature "selects" those offspring that have better chances of survival, and the variations that give them better chances of survival are passed on to the following generations. This selection is not a conscious process. Let us consider an example. Suppose there live some red insects in a tree. A certain type of bird eats these insects. Due to a genetic variation, suppose one insect is born green, and another is born blue. Since the leaves of the tree are green, it is difficult for the birds to spot the green insects. The blue color provides no evolutionary advantage. This means, the birds will eat up the red and blue insects, and soon just the green insects will be remaining, whose offspring will also be green. Thus, we see that a colony of red insects has evolved into a colony of green insects. Nature does not consciously select the green insects, rather it happens spontaneously because the birds will eat up the red and blue insects. So, there is no purpose of evolution. It is a spontaneous process. And the fact that evolution has no specific purpose is a good thing. It gives a lot of flexibility and adaptability to the process [37]. Machines created for a particular purpose only serve that purpose. The same cannot be said of living creatures that have evolved spontaneously over the years. This is why we human beings, currently the maximally-evolved species on Earth, are so good at adapting to various environments. We are not hardwired or genetically preprogrammed to suit one particular environment. A newborn human is pitiable, learning everything from the beginning. Other than thirst, hunger and such instincts, we are born livewired. Most lower animals are not. 
A newborn polar bear can readily adapt to the cold, on birth. But the disadvantage of being hardwired is that the adaptation cannot be adjusted. Bring the polar bear into hot weather and it will die. Being flexible, we can, however, adapt more or less everywhere, from Antarctica to the Sahara [38].
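
The insect example above can be turned into a small calculation. The sketch below is a deliberately simplified, deterministic model: it tracks only the fraction of each color in the colony and assumes made-up survival probabilities per generation (green insects escape the birds more often, while red and blue are equally easy to spot). The numbers and the names `SURVIVAL`, `next_generation` and `simulate` are illustrative assumptions, not data.

```python
# Assumed probability that an insect of each color escapes the birds
# in one generation (hypothetical values chosen for illustration).
SURVIVAL = {"red": 0.5, "blue": 0.5, "green": 0.9}


def next_generation(fractions):
    """Return the color fractions after one round of predation.

    Survivors are assumed to produce offspring of their own color, so
    the new fractions are the surviving shares rescaled to sum to 1.
    """
    surviving = {
        color: share * SURVIVAL[color] for color, share in fractions.items()
    }
    total = sum(surviving.values())
    return {color: share / total for color, share in surviving.items()}


def simulate(fractions, generations):
    """Apply next_generation repeatedly and return the final fractions."""
    for _ in range(generations):
        fractions = next_generation(fractions)
    return fractions


# A colony that is almost entirely red, with rare green and blue mutants.
colony = {"red": 0.98, "blue": 0.01, "green": 0.01}
after = simulate(colony, 50)
```

No step in this model "chooses" green. The green share grows only because, generation after generation, a larger proportion of green insects is left to reproduce, while the blue mutants, having no advantage, fade along with the red ones.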

But beyond the properties of a living organism, none of this explains how the quality of being aware of our existence emerges. It could be that the key to understanding this lies in studying both the arrangement of and the interaction between the neurons in our brains. Note that both the arrangement of and the interaction between the parts must be present for consciousness to emerge. Think of it this way. Only a particular arrangement of simple parts can give rise to an emergent pattern. A random collection of dots arranged in a particular way might remind us of an airplane, for instance. Basically, we perceive this pattern as something resembling an airplane. We are capable of perceiving thanks to the fact that the neurons in our brains maintain an arrangement that allows perception. But when we are perceiving this particular pattern as something resembling an airplane, our subjective experience is changing, the pattern is not. And for our subjective experience to change, evolve, modify itself over time, in short, for us to be conscious, there must be interaction between the different parts of our brain as well. And interaction must be in the correct manner such that it does not change the overall arrangement and interaction in a way that it can no longer give rise to conscious experience. A point to be noted here is that conscious systems have evolved over a long period of time, and they have self-organizing characteristics. Simply put, conscious systems are not fragile, and it is not easy to break the pattern so that the system suddenly stops being conscious just on account of a spontaneous change in the arrangement of and interaction between its parts. Here, we are not talking about natural death due to aging or fatal physical injuries that forcefully interfere with normal brain activity and the functioning of other vital organs of the body. 
So anyway, our consciousness is likely a result of the arrangement of as well as interaction between the neurons in our brain. Billions of the nerve cells called neurons are behind everything we think and do. Billions of neurons are connected by synapses in our brain. Special chemicals called neurotransmitters dart between the synapses and give rise to our conscious experience. Synaptic connections form and deform – and we form and forget memories; synaptic connections strengthen as we practice an activity – and we master it to carry it out unconsciously; neural activity becomes rhythmic – and we fall asleep. In fact, most of the time, we are unconscious, blissfully ignorant of the fact. If we were fully conscious of what we were doing, we would take a minute to react to a hot stimulus – and of course, that is not desirable. So, consciousness is an integrated whole, it cannot be understood by simply studying a neuron. Being an integrated whole is a condition that a system needs to satisfy to be conscious, according to Tononi's integrated information theory [39, 40]. There is one more condition. The system must be capable of processing, storing and recalling information. For consciousness to emerge, the information processing needs to be integrated. Conscious systems must be integrated into a unified whole. If a conscious system consisted of two independent parts, they would feel like two separate conscious entities rather than one, and if a conscious part is unable to communicate with the rest of the system (here, with the other conscious part, which we have assumed is independent of this part), then the rest of the system cannot, in any way, contribute to the subjective experience of the original conscious part [41]. We can safely claim that if the parts constituting the complex system are not close enough, they would not be able to work together and make consciousness emerge. 
It should be noted that the physical distance between the constituent parts need not be a crucial factor, as long as there are efficient ways of quick information transmission between the parts. The main thing is that there should be a dependence between the different parts of the system: one part can affect the other part and vice versa. This is what Tononi means by integrated. Although completely independent parts can maintain a specific arrangement relative to one another, they cannot affect one another, and so they cannot give rise to any interaction. Building on Tononi, Tegmark proposed that consciousness could be just a new state of matter [41, 42]. In this state of matter, atoms are arranged in a special manner so that they process information. Simply put, consciousness could be the way information feels when being processed in certain complex ways. Because of this, Tegmark calls consciousness “doubly substrate-independent”: in this view, consciousness is not even the way we feel, it is the way information feels. In short, the arrangement and interaction in the brain gives rise to information, but consciousness has not yet emerged at this level. If we go one step further and consider the behavior of this information, we will find that the property of conscious experience emerges from the way information feels when being processed. Information itself is independent of the physical matter of the brain (the substrate), and the way information feels is doubly independent of the substrate. It is crucial to understand that information, and in turn consciousness, is not independent of the brain. They are independent of the physical matter of the brain, or the substrate. However, they depend on the physical structure and activity of the brain (arrangement and interaction). Consciousness is in the pattern, not in the physical substance. 
This might also mean that it is possible, in theory, to simulate consciousness if we can recreate the required pattern in the parts of any sufficiently complex system, not necessarily the human brain [43]. Thus, we can conclude that consciousness probably emerges, directly or indirectly, from a particular arrangement of and interaction between physical matter, which gives rise to information that is processed. We have already discussed emergence. In the context of the brain and consciousness, we know that each neuron is individually simple and carries out relatively simple tasks. However, the system the neurons have created together is way more complex. We cannot just sum up each neuron. If we do, we will not get consciousness [38]. That needs something else. This something might arise from the arrangement of and the interaction between the parts of the system. Now, how sure are we that consciousness is an emergent phenomenon? The answer is not, of course, 100%. However, we do have strong reasons to believe consciousness is emergent from (and heavily dependent on) the physical structure of the brain. A slight change in a single neural circuitry of our brain can fundamentally change our behavior, even identity.

We discussed two kinds of emergence: weak and strong. Now, is consciousness weakly emergent or strongly emergent? Since consciousness is fundamentally different from matter and most of the physical world, it may be the case that consciousness is strongly emergent, meaning we need fundamentally new laws to study consciousness. However, this does not rule out the possibility of consciousness being weakly emergent. A very long time back, we thought of fire as something completely different from, say, wood. Liquid water is a new type of substance, and is different from ice. At that point of time, it would have seemed that fundamentally new kinds of laws are required to understand fire, which would be entirely different from the laws governing the behavior of wood. And the same goes for liquid water and ice. But today, we know that all of them are made of atoms, which are composed of the same fundamental particles: quarks and electrons. These particles are arranged differently and behave differently in different substances, due to which fire is different from wood, or water from ice. But fundamentally, they are all made of the same kind of particles. So, although consciousness is extremely complex and sophisticated, there is no evidence on the basis of which we can say that we need fundamentally new kinds of laws to explain consciousness. It is perfectly possible that the complexity of consciousness emerges from many simple parts interacting in a very complicated manner, in which case consciousness would have a strong dependence on the parts it is made of. The only possible objection one might have to the above argument is that fire, wood, ice and water are all physical objects that we can examine from a third-person perspective, while consciousness is something completely different. 
It is a qualitative property, and as already outlined above, we are dependent on our consciousness for all the conclusions we draw, so we cannot examine consciousness from a third-person perspective, unless we are able to artificially simulate consciousness. Even for the latter, we first must ensure that the consciousness we simulate is exactly identical to human consciousness. Otherwise, the conclusions we draw from studying this simulated consciousness would not be applicable to human consciousness. Anyway, in response to the objection mentioned above, it might be a pure coincidence that a particular arrangement of matter gives rise to sentience. Consciousness is different, and by coincidence, it happens to have the unique ability to comprehend its own source if a particular arrangement and interaction of matter is achieved. But that does not change the fact that consciousness may still be subject to the known laws of physics. Following this line of argument even further, it may be the case that there's no feeling that cannot be generated, at least in theory, by manipulating the levels of different chemicals in the brain. Of course, the brain is affected by a lot of factors (environmental, genetic etc.). But the point is, all these factors change the physical structure of the brain in some subtle way or the other [37, 38]. We know it's not always possible to pin down these subtle changes, and often it is not a single change. So when we say there's no feeling that cannot be generated by manipulating the levels of different chemicals in the brain, we don't mean to say that we can easily recreate all feelings artificially. We don’t have the computing power to do that, and it does not appear that we will achieve such levels of computing power anytime soon, assuming it is possible to simulate consciousness computationally at all. 
But it might be possible, at least in theory, to understand every aspect of consciousness using the known laws (or different forms of these laws). However, of course, the people who claim consciousness to be strongly emergent have a valid argument that cannot be refuted. As we discussed, they claim that consciousness is so different from ordinary matter that we don’t even know the right questions to ask. It is not simply a question of computing power; we don’t know what to compute [44].

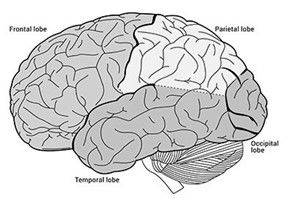

So assuming consciousness is emergent from the physical structure of the brain, an important question now is whether specific properties of consciousness can be linked to specific areas or processes of the brain. To a limited extent, yes. The brain can be divided into four lobes. The frontal lobe processes our rational thoughts. It houses the prefrontal cortex. Damage to it results in loss of the ability to plan, contemplate the future etc. The right part of the parietal lobe controls sensory attention, body image, etc. Its left part controls body locomotion (movements). The temporal lobe controls face recognition, language, emotions etc., while the occipital lobe deals with vision. However, it is not exactly correct to assign a particular function to a particular region of the brain. The brain depends on large-scale coordination and interaction to function.

Figure 9: The four lobes of the human brain [Source: https://commons.wikimedia.org]

There are many other ways to categorize the different regions and processes of the brain. Now, instead of asking what regions of the brain correspond to what properties of consciousness, let us ask a slightly different question. Which specific regions and processes of the brain give rise to conscious experiences in the first place? Technically, these processes are the Neural Correlates of Consciousness (NCCs). However, to better understand consciousness and complex systems, we need to go a step further and look for Physical Correlates of Consciousness (PCCs): in other words, what particular arrangements of the fundamental particles can, directly or indirectly, give rise to consciousness? Note that here we have come down to the level of fundamental particles, and not neurons. The study of the complex anatomy of the brain, even at the level of neurons, could not satisfactorily reveal the secret of consciousness. Of course, neuroscientists have come a long way in making remarkable technological advancements in the fields of medicine, Artificial Intelligence, etc. Researchers have created thought-driven wheelchairs to help paralyzed patients [45, 46]. But we are nowhere near creating true Artificial Intelligence yet. Artificial Intelligence models based on neural networks can solve a lot of problems that are difficult and time-consuming for us; but all of these problems can usually be reduced to a well-defined set of rules. These same AIs find it impossible to perform tasks like understanding and appropriately responding to a joke, which seem easy to us. This has led many to wonder whether human consciousness can ever be understood the way we build AIs. The answer could be either yes or no. 
We don’t know whether the special properties of human consciousness can be fully attributed to its sheer complexity and possibly a different kind of arrangement and interaction of its parts, or whether we need entirely different kinds of methods and insights to study systems as complex as consciousness. As of now, simply and honestly put, we don’t have a clear and convincing explanation of consciousness. A good way to get a rough idea of the way human consciousness works is to build models. But what makes the situation complicated is that human consciousness cannot be modeled by assuming that we seek only those things that are necessary for survival. We go much beyond that. A lot of common human traits cannot be explained or easily derived just from this basic assumption. At this point, another important question confronts us: the question of whether free will exists. Do we humans “choose” to do the things that seem unusual from the perspective that we are organisms that only seek to maximize their chances of survival? Do we have the power to make a really conscious choice, or is it that the choices that seem conscious to us are actually not so much in our hands? Well, there is no way to conclusively prove that free will exists, even if it actually does exist. So this is a question which is resistant to scientific analysis. It's not a scientific question. It could be that free will is an emergent property of human consciousness, and that is why it has no satisfactory explanation in conventional science. But it is also possible that there is no free will. After all, we are nothing more than machines. Yes, we are machines of flesh and blood, not machines of metal. But we are made of the same fundamental particles that follow the same laws of physics; so fundamentally that makes no difference. Free will is just a dream of the machine, which probably can be explained by studying the physical state of the machine and the way its parts are arranged and interact. 
That’s one way to think about it. That is all that can be safely said about free will. There are some illogical arguments regarding free will as well. Some people argue that since quantum mechanics shows that there is an inherent element of randomness in the universe, free will must exist. This argument makes no sense. Although the outcome of a quantum experiment is random and cannot be predicted, it does not mean that we can consciously influence the outcome [47]. Determinism ruled out is not equal to free will verified. And even though the universe is random to a certain extent at the fundamental level, it could be the case that this apparent randomness is indicative of our incomplete knowledge and once we successfully formulate a complete theory of the universe (given that is possible at all), we may find that the universe is perfectly deterministic and ordered, just as Einstein believed. The reason the universe might be deterministic is that even randomness can be deterministic. As we have already discussed, there can be universal laws that apply to random systems. So anyway, free will might be an illusion, and human actions might be determined by the laws of physics and the physical state of the brain. Free will is, of course, a useful and pragmatic concept, but it might not be a fundamental scientific truth. Free will is a product of human perception and cognition, and it’s important because it serves as a basis for morality, responsibility, and agency.

Conclusion

In this article, we looked at complex systems and the defining features of complexity. We discussed, with illustrations, assembly theory and the evolution of complex systems, emergence and self-organization and the presence of competing effects in complex systems, among other things. We illustrated why even a thorough knowledge of the fundamental constituents of a complex system and how they behave - in other words, the knowledge that comes from conventional science - is not sufficient to study every aspect of a complex system. To account for these “new” aspects of the system, we introduced the concept of emergence, which takes care of the arrangement of the constituent parts of the system and the way they interact with one another. The evolution of the system is explained by a recent and promising theory - assembly theory. Another important feature of complex systems is the presence of competing effects inside them; the delicate balance between these effects is a crucial factor affecting the complexity of the system. In the end, we also discussed, in some detail, various aspects of consciousness. It is clear that conventional science falls short of explaining the behavior of complex systems around us, which demonstrates the importance of studying complex systems. Even though we may never completely understand every aspect of the universe, the relatively new field of study of complex systems has the potential to explain many perplexing phenomena in Nature as well as pave the way for revolutionary new technologies across various disciplines.

References

[1] Ball, Philip. (2023). A New Idea for How to Assemble Life. Quanta Magazine. https://www.quantamagazine.org/a-new-theory-for-the-assembly-of-life-in-the-universe-20230504/

[2] Jones, Andrew Zimmerman. (2023, April). Albert Einstein: What Is Unified Field Theory? https://www.thoughtco.com/what-is-unified-field-theory-2699364

[3] Casti, J. L. (2023, August). Complexity. Encyclopedia Britannica. https://www.britannica.com/science/complexity-scientific-theory

[4] Sutton, C. (2020, February 28). Unified field theory. Encyclopedia Britannica. https://www.britannica.com/science/unified-field-theory

[5] Goenner, H. F. (2004). On the History of Unified Field Theories. Living reviews in relativity, 7(1), 2. https://doi.org/10.12942/lrr-2004-2

[6] Wood, C and Stein, V. (2023). What is string theory? Space.com. https://www.space.com/17594-string-theory.html

[7] Greene, Brian. (2015). Why String Theory Still Offers Hope We Can Unify Physics. Smithsonian Magazine. https://www.smithsonianmag.com/science-nature/string-theory-about-unravel-180953637/

[8] Rovelli, Carlo (1998). Loop Quantum Gravity. Living reviews in relativity, 1(1), 1. https://doi.org/10.12942/lrr-1998-1

[9] Bianconi, Ginestra et al. (2023). Complex systems in the spotlight: next steps after the 2021 Nobel Prize in Physics. J. Phys. Complex. 4 010201. https://iopscience.iop.org/article/10.1088/2632-072X/ac7f75

[10] Standish, Russell. (2008). Concept and Definition of Complexity. 10.4018/978-1-59904-717-1.ch004. https://www.researchgate.net/publication/1922371_Concept_and_Definition_of_Complexity

[11] Britannica, T. Editors of Encyclopaedia (2023, March). Computational complexity. Encyclopedia Britannica. https://www.britannica.com/topic/computational-complexity

[12] Hossenfelder, Sabine. (2023). The Biggest Gap in Science: Complexity. YouTube - Sabine Hossenfelder. https://youtu.be/KPUZiWMNe-g

[13] Ma’ayan, Avi. (2017). Complex systems biology. Journal of the Royal Society Interface (Volume 14, Issue 134). https://doi.org/10.1098/rsif.2017.0391

[14] Weinberg, Steven. (1994). Dreams of a Final Theory: The Scientist's Search for the Ultimate Laws of Nature. Knopf Doubleday Publishing Group. ISBN: 9780679744085

[15] Sharma, A., Czégel, D., Lachmann, M. et al. (2023). Assembly theory explains and quantifies selection and evolution. Nature 622, 321–328. https://doi.org/10.1038/s41586-023-06600-9

[16] Marletto, Chiara. (2021). The Science of Can and Can't: A Physicist's Journey Through the Land of Counterfactuals. Allen Lane. ISBN: 9780241310946

[17] Pines, David. (2014). Emergence: A unifying theme for 21st century science. Santa Fe Institute - Foundations & Frontiers of Complexity. https://medium.com/sfi-30-foundations-frontiers/emergence-a-unifying-theme-for-21st-century-science-4324ac0f951e/

[18] Heylighen, Francis. (1995). Downward Causation. Principia Cybernetica Web. http://pespmc1.vub.ac.be/DOWNCAUS.html

[19] O’Connor, Timothy. (2021). Emergent Properties. The Stanford Encyclopedia of Philosophy (Winter 2021 Edition), Edward N. Zalta (ed.). https://plato.stanford.edu/archives/win2021/entries/properties-emergent/

[20] Greene, Brian. (2010). The Elegant Universe: Superstrings, Hidden Dimensions, and the Quest for the Ultimate Theory. W. W. Norton & Company. ISBN: 9780393338102

[21] Fromm, J. (2005). Ten Questions about Emergence. arXiv: Adaptation and Self-Organizing Systems. https://arxiv.org/ftp/nlin/papers/0509/0509049.pdf

[22] Sorensen, Roy. (2023). Nothingness. The Stanford Encyclopedia of Philosophy (Spring 2023 Edition), Edward N. Zalta & Uri Nodelman (eds.). https://plato.stanford.edu/archives/spr2023/entries/nothingness/

[23] The Sierpinski Triangle. Mathigon. https://mathigon.org/course/fractals/sierpinski

[24] Baranger, Michel. (2000, April). Chaos, complexity and entropy: a physics talk for non-physicists. New England Complex Systems Institute. https://necsi.edu/chaos-complexity-and-entropy

[25] Carroll, Sean. (2016). The Big Picture: On the Origins of Life, Meaning, and the Universe Itself. Simon and Schuster. ISBN: 9781780746074

[26] Wolf, T and Holvoet, T. (2004). Emergence Versus Self-Organisation: Different Concepts but Promising When Combined. Engineering Self-Organising Systems. 3464. 1-15. https://doi.org/10.1007/11494676_1

[27] Drake, G. W.F. (2024, February 15). Entropy. Encyclopedia Britannica. https://www.britannica.com/science/entropy-physics

[28] Weisstein, Eric W. Cellular Automaton. Wolfram MathWorld. https://mathworld.wolfram.com/CellularAutomaton.html

[29] Cornell Math Explorers' Club. Conway’s Game of Life. Cornell University. https://pi.math.cornell.edu/~lipa/mec/lesson6.html

[30] Kauffman, S. A. (1991). Antichaos and Adaptation. Scientific American, 265(2), 78–85. http://www.jstor.org/stable/24938683

[31] Burian, R. M., and Richardson, R. C. (1990). Form and Order in Evolutionary Biology: Stuart Kauffman’s Transformation of Theoretical Biology. PSA: Proceedings of the Biennial Meeting of the Philosophy of Science Association, 1990, 267–287. http://www.jstor.org/stable/193074

[32] Kauffman, S. (1993). The Origins of Order: Self-organization and Selection in Evolution. Oxford University Press. ISBN: 9780195079517

[33] Rogers, K. (2024, September 24). Abiogenesis. Encyclopedia Britannica. https://www.britannica.com/science/abiogenesis

[34] Pross, A., & Pascal, R. (2013). The origin of life: what we know, what we can know and what we will never know. Open biology, 3(3), 120190. https://doi.org/10.1098/rsob.120190

[35] Parker, E. T., Cleaves, J. H., Burton, A. S., Glavin, D. P., Dworkin, J. P., Zhou, M., Bada, J. L., & Fernández, F. M. (2014). Conducting Miller-Urey experiments. Journal of visualized experiments : JoVE, (83), e51039. https://doi.org/10.3791/51039

[36] Dawkins, Richard. (2010). The Greatest Show on Earth: The Evidence for Evolution. Black Swan Publishing. ISBN: 9780552775243

[37] Eagleman, David. (2012). Incognito: The Secret Lives of the Brain. Vintage. ISBN: 9780307389923

[38] Eagleman, David. (2016). The Brain: The Story of You. Canongate Books. ISBN: 9781782116615

[39] Tononi, G., Boly, M., Massimini, M., & Koch, C. (2016). Integrated information theory: from consciousness to its physical substrate. Nature reviews. Neuroscience, 17(7), 450–461. https://doi.org/10.1038/nrn.2016.44

[40] Tononi, G. (2004). An information integration theory of consciousness. BMC Neurosci 5, 42. https://doi.org/10.1186/1471-2202-5-42

[41] Tegmark, Max. (2017). Life 3.0: Being Human in the Age of Artificial Intelligence. Knopf Doubleday Publishing Group. ISBN: 9781101946596

[42] Tegmark, Max. (2015). Consciousness as a State of Matter. arXiv. https://arxiv.org/pdf/1401.1219

[43] Rescorla, Michael. (2020). The Computational Theory of Mind. The Stanford Encyclopedia of Philosophy. https://plato.stanford.edu/archives/fall2020/entries/computational-mind

[44] Ellis, George. (2020). Emergence in Solid State Physics and Biology. https://arxiv.org/pdf/2004.13591

[45] UT News. (2022). Brain-Powered Wheelchair Shows Real-World Promise. The University of Texas at Austin. https://news.utexas.edu/2022/11/18/brain-powered-wheelchair-shows-real-world-promise/

[46] Tonin, Luca et al. (2022). Learning to control a BMI-driven wheelchair for people with severe tetraplegia. iScience, Volume 25, Issue 12, 105418. https://www.cell.com/iscience/fulltext/S2589-0042(22)01690-X

[47] Carroll, Sean. (2021). Something Deeply Hidden: Quantum Worlds and the Emergence of Spacetime. OneWorld Publications. ISBN: 9781786078360
